In the web development world, there’s always some new tool trending. Its proponents sing its praises over the current generation of tooling, triggering quite a bit of FOMO among web developers.

On the other side, web dev veterans would often counter with something like “we achieved the same thing long ago, but simpler, cheaper, and without all the hoopla”.

This happens again and again, for a simple reason: the basic problem remains the same.

The challenge

First, you want the content that is the same for everyone to be served as fast as possible, with the minimal number of moving parts that might make it fail or slow down. If you can avoid a call to the web server to render this content afresh, that’s great.

Then, you also want the content that’s not the same for everyone to be served as fast as possible - without delaying the static parts, or breaking the whole page if it fails, or causing flicker and layout shift when it arrives.

And of course, having in effect two mechanisms to render a full page (one for the static parts, another for the dynamic bits) means more code to write, manage, and reason about.

Last but not least, the “static” part is almost never truly static. Content teams want to make changes, preview them and then launch them, without frustrating delays. In more technical terms, the system should support invalidating and re-generating content at will - without needing an ever-longer build time as your site grows.

If you’ve been doing web dev for a while, you can probably recognize where each approach lands (and where it falls short): Server-Side Rendering (SSR) on one side, Static Site Generators (SSG) on the other, and the hybrid approach of Incremental Static Regeneration (ISR) acting as a middle ground.

Out of all this commotion, there’s a pattern that has recently gained momentum:

- The tooling you use should let you choose whether a piece of shared content should be rendered at build time, or just-in-time when the first request comes for it. It should not force you to settle on the same approach for all your content.

- Once content is rendered, the CDN should cache it and serve it quickly, but allow invalidating any of it as needed. Rather than periodically invalidating all content by setting a Time-To-Live (TTL), it’s far more efficient to instruct the CDN to invalidate just the specific URLs that need refreshing, immediately after the underlying content changes have been published at the source.

- Lastly, it’s best if users don’t experience slowdowns while content is being regenerated. This is what the stale-while-revalidate directive (a.k.a. SWR) is for: the CDN keeps serving the cached copy while a fresh one is rendered in the background.
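To make the SWR behavior concrete, here is a minimal sketch of how a shared cache might classify a stored response under a header such as `Cache-Control: public, s-maxage=60, stale-while-revalidate=300` (directive semantics as in the HTTP caching RFCs). The helper names `classify` and `cacheControlFor` and the three-state result are illustrative assumptions, not any CDN's actual API.

```typescript
// Hypothetical model of how a shared cache could treat a stored response,
// given the s-maxage and stale-while-revalidate values from its Cache-Control header.
type CacheDecision = "fresh" | "stale-serve-and-revalidate" | "expired";

interface CachePolicy {
  sMaxAge: number;              // seconds the response is considered fresh
  staleWhileRevalidate: number; // extra seconds a stale copy may still be served
}

function classify(ageSeconds: number, policy: CachePolicy): CacheDecision {
  if (ageSeconds < policy.sMaxAge) {
    // Serve straight from cache; no origin call needed.
    return "fresh";
  }
  if (ageSeconds < policy.sMaxAge + policy.staleWhileRevalidate) {
    // Serve the stale copy immediately and refresh it in the background.
    return "stale-serve-and-revalidate";
  }
  // Too old: the visitor has to wait for the origin to render a fresh copy.
  return "expired";
}

// Build the header value that expresses the same policy.
function cacheControlFor(policy: CachePolicy): string {
  return `public, s-maxage=${policy.sMaxAge}, stale-while-revalidate=${policy.staleWhileRevalidate}`;
}

const policy: CachePolicy = { sMaxAge: 60, staleWhileRevalidate: 300 };
console.log(cacheControlFor(policy)); // public, s-maxage=60, stale-while-revalidate=300
console.log(classify(30, policy));    // fresh
console.log(classify(120, policy));   // stale-serve-and-revalidate
console.log(classify(400, policy));   // expired
```

Combined with on-demand purging, the s-maxage value can be long: freshness is then driven by explicit invalidation rather than by the TTL expiring.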

If you follow the pattern above, then no pages are truly static. You’re able to pre-render some content and have it efficiently cached, or you could generate pages incrementally as a given resource is first accessed. In both cases, you can always trigger a cache purge to make it re-render.
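A toy, in-memory model of that lifecycle may help: some URLs are pre-rendered up front, others are rendered on their first request, and any of them can be purged so that the next request re-renders it. The `PageCache` class and its render callback are hypothetical; a real CDN spreads this state across many nodes.

```typescript
// Hypothetical in-memory model of "no page is truly static":
// pre-render some pages, render the rest on first request, purge on demand.
type Renderer = (url: string) => string;

class PageCache {
  private pages = new Map<string, string>();
  private renderFn: Renderer;
  public renderCount = 0;

  constructor(renderFn: Renderer) {
    this.renderFn = renderFn;
  }

  // Build-time rendering for pages you want ready before the first visitor.
  prerender(urls: string[]): void {
    for (const url of urls) this.store(url);
  }

  // Serve from cache, or render just-in-time on a miss.
  get(url: string): string {
    const cached = this.pages.get(url);
    return cached !== undefined ? cached : this.store(url);
  }

  // On-demand invalidation: the next request for this URL re-renders it.
  purge(url: string): void {
    this.pages.delete(url);
  }

  private store(url: string): string {
    this.renderCount++;
    const html = this.renderFn(url);
    this.pages.set(url, html);
    return html;
  }
}

let version = 1;
const cache = new PageCache((url) => `<h1>${url} v${version}</h1>`);
cache.prerender(["/"]);                 // rendered at "build time"
cache.get("/");                         // cache hit, no render
cache.get("/products/x");               // rendered on first request
version = 2;                            // content changes at the source...
cache.purge("/products/x");             // ...so purge just that URL
console.log(cache.get("/products/x"));  // <h1>/products/x v2</h1>
console.log(cache.renderCount);         // 3
```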

So, how can developers get to use this pattern?

It’s not (just) the framework

Many developers would focus first on choosing the right web framework, and it’s easy to see why.

A good framework can do much of the heavy-lifting for you, and provide recipes for how and when to use each rendering and revalidation scenario. And of course, your choice of framework will greatly affect your developer experience. You want to settle on a framework that works with you, not find yourself fighting against it.

You may choose a framework that gives you full control over your caching patterns, such as Astro or Remix. Or you may choose a framework such as Next.js, which takes over more of the low-level work at the cost of losing some control. Some frameworks, such as Nuxt, support both modes.

But there is another big component to consider - one that’s easy to miss:

The platform your code is running on.

Here’s why: serving pages is fastest when done by a CDN, so the framework depends on the CDN to provide fine-grained control over what to cache, for whom, when to revalidate, and how to keep the end-user experience from slowing down during that revalidation.

In fact, the more frameworks are moving towards advanced caching functionality, the more they depend on the underlying platform for an optimal implementation.

Features you want in your CDN

Here are a few of the non-trivial features that frameworks need modern CDNs to provide:

- Strong on-demand revalidation. When a piece of content is updated in your backend (say, a product X has an updated price), you should not have to figure out all page URLs that should be revalidated, but instead use cache tags to easily revalidate them all at once.
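Conceptually, cache tags are a reverse index from a piece of content to every cached URL that used it. Below is a minimal sketch, assuming a hypothetical `TagIndex` class and tag names like `product:x`. Real platforms typically let you attach tags through a response header and expose a purge-by-tag API; check your platform's docs for the exact names.

```typescript
// Hypothetical reverse index: tag -> set of cached URLs that depend on it.
class TagIndex {
  private urlsByTag = new Map<string, Set<string>>();

  // Record which tags a rendered page depends on (e.g. when it is cached).
  tag(url: string, tags: string[]): void {
    for (const t of tags) {
      let urls = this.urlsByTag.get(t);
      if (!urls) {
        urls = new Set<string>();
        this.urlsByTag.set(t, urls);
      }
      urls.add(url);
    }
  }

  // Purge by tag: return every URL to invalidate, without the caller
  // having to know which pages show that content.
  purgeTag(tag: string): string[] {
    const urls = this.urlsByTag.get(tag) ?? new Set<string>();
    this.urlsByTag.delete(tag);
    return Array.from(urls).sort();
  }
}

const index = new TagIndex();
index.tag("/", ["product:x", "product:y"]);
index.tag("/products/x", ["product:x"]);
index.tag("/category/shoes", ["product:x", "product:y"]);
index.tag("/products/y", ["product:y"]);

// Product X's price changed in the backend:
console.log(index.purgeTag("product:x")); // [ '/', '/category/shoes', '/products/x' ]
```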

- Efficient SWR, so that when a major piece of content revalidates (say, your homepage!), regeneration takes place in the background, once for all users. If your homepage were invalidated “naively” and then got a ton of requests from across the globe, you might end up with many concurrent requests to the SSR function. This is not just wasteful; it can also slow down your backend, eat into your DB/CMS quotas, or worse.
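The de-duplication described above is often called request coalescing, or "single-flight". Here is a minimal in-process sketch, assuming a hypothetical `renderHomepage` function standing in for the SSR call: the first revalidation for a key starts the render, and every concurrent request for that key awaits the same promise (while, under SWR, visitors keep getting the stale copy).

```typescript
// Hypothetical single-flight helper: at most one in-flight render per cache key.
class SingleFlight<T> {
  private inflight = new Map<string, Promise<T>>();

  run(key: string, work: () => Promise<T>): Promise<T> {
    const existing = this.inflight.get(key);
    if (existing) return existing; // join the render that is already running

    // First caller starts the work; the entry is cleared once it settles,
    // so a later revalidation can trigger a new render.
    const p = work().finally(() => this.inflight.delete(key));
    this.inflight.set(key, p);
    return p;
  }
}

let ssrCalls = 0;
const renderHomepage = async (): Promise<string> => {
  ssrCalls++;
  return "<html>fresh homepage</html>";
};

const flights = new SingleFlight<string>();
// A burst of concurrent requests right after the homepage was invalidated:
const burst = Array.from({ length: 100 }, () => flights.run("/", renderHomepage));
Promise.all(burst).then((pages) => {
  console.log(ssrCalls);     // 1
  console.log(pages.length); // 100
});
```

This only coalesces requests within one process; doing the same across a globally distributed CDN requires a shared coordination point, which is the kind of infrastructure the rest of this post is about.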

- Control over cache key granularity via the Vary header is a lesser-known but often surprisingly critical need. A request to the same URL might return different responses based on query parameters, the user’s language, their cookies, etc. Without control over cache granularity, you would either break correctness (by caching different responses under the same key) or break cache hit rates (by effectively never caching a page because of some high-cardinality query parameter that doesn’t actually affect the response). Very often, the latter happens: developers assume they have a great cache hit rate when it’s actually very low, because, lacking more information, the CDN takes a conservative approach.
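One way to picture the trade-off is a cache-key function that includes only the inputs that actually change the response. The sketch below assumes, hypothetically, that only the `page` and `sort` query parameters and the visitor's preferred language matter; everything else (tracking parameters such as `utm_source`, unrelated headers) is dropped so it cannot fragment the cache. Headers such as Vary exist to tell the CDN this kind of thing declaratively.

```typescript
// Hypothetical cache-key builder: keep only the request inputs that change the response.
const RELEVANT_QUERY_PARAMS = ["page", "sort"]; // assumption for this example
const SUPPORTED_LANGUAGES = ["en", "de", "fr"]; // assumption for this example

function cacheKey(rawUrl: string, acceptLanguage: string | null): string {
  const url = new URL(rawUrl);

  // Keep relevant query params in a fixed order, so "?sort=a&page=2"
  // and "?page=2&sort=a" share one cache entry.
  const kept: string[] = [];
  for (const name of RELEVANT_QUERY_PARAMS) {
    const value = url.searchParams.get(name);
    if (value !== null) kept.push(`${name}=${encodeURIComponent(value)}`);
  }

  // Collapse Accept-Language (high cardinality) to one of a few languages.
  const primary = (acceptLanguage ?? "").split(",")[0].trim().slice(0, 2).toLowerCase();
  const lang = SUPPORTED_LANGUAGES.includes(primary) ? primary : "en";

  return `${url.pathname}?${kept.join("&")}|lang=${lang}`;
}

// Tracking parameters no longer split the cache...
console.log(cacheKey("https://example.com/shop?page=2&utm_source=mail", "de-DE,de;q=0.9"));
// /shop?page=2|lang=de
console.log(cacheKey("https://example.com/shop?utm_source=ads&page=2", "de"));
// /shop?page=2|lang=de
// ...while inputs that do change the response still get separate entries.
console.log(cacheKey("https://example.com/shop?page=3", "en-US"));
// /shop?page=3|lang=en
```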

Of course, all of the above features should work seamlessly together, without gotchas.

The reality is that almost no platform provides developers (and the frameworks they use) with all of that functionality.

This is why you would find that some features - even basic SSR - are not enabled by default by frameworks. For example, Astro & Nuxt require platform-specific adapters for SSR, each adapter having its own notes & caveats.

There is nothing wrong with the frameworks themselves! It is simply that providing such functionality out of the box depends heavily on the platform.

With Next.js, platform support is even more fragmented. To the best of my knowledge, only one platform (besides the framework authors’ own) supports all of Next.js’s rendering features, including on-demand revalidation, Partial Prerendering (PPR), etc. Unsurprisingly, that is Netlify (see tests).

It’s important to note that the Netlify platform itself is framework-agnostic, and will remain so.

Our approach is to build the necessary platform primitives first, then do our best to let you easily utilize them via common web frameworks. That’s also why we love it when frameworks let you go beyond their out-of-the-box tooling and tweak their behavior to your needs.

Great, so if Netlify has it all, what’s missing?

How Durable Cache fits in

If there’s an underlying message to this post, it is this: achieving performant serving of up-to-date content takes some serious infra, much of it often hidden from view.

A modern-day CDN should not just provide a correct implementation, it should also be ever-more-optimized for the use cases users want. What worked well for static content or simple dynamic features is not enough nowadays.

Case in point: when a website visitor requests a static asset from Netlify, the CDN edge node they are connected to checks its local cache first. If the asset is not there, the node fetches it from our internal store of static assets. No CDN in the world loads all content onto all nodes: it doesn’t scale, and would be prohibitively expensive.

In classic CDN terms, that internal store serves as the origin. But unlike classic systems, our origin is an implicit component of deploying to Netlify that you don’t need to configure or even think about.

Of course, communication between the edge node and that origin adds some latency, but that latency is well controlled and monitored by us. What happens, though, when that origin call actually invokes a serverless function to render a page or return an API response?

In that case, there are many more factors contributing to added latency: cold starts, rendering performance of the site’s own code, third-party API calls (which bring their own latency, quotas, etc.). Only a subset of these factors are under Netlify’s control.

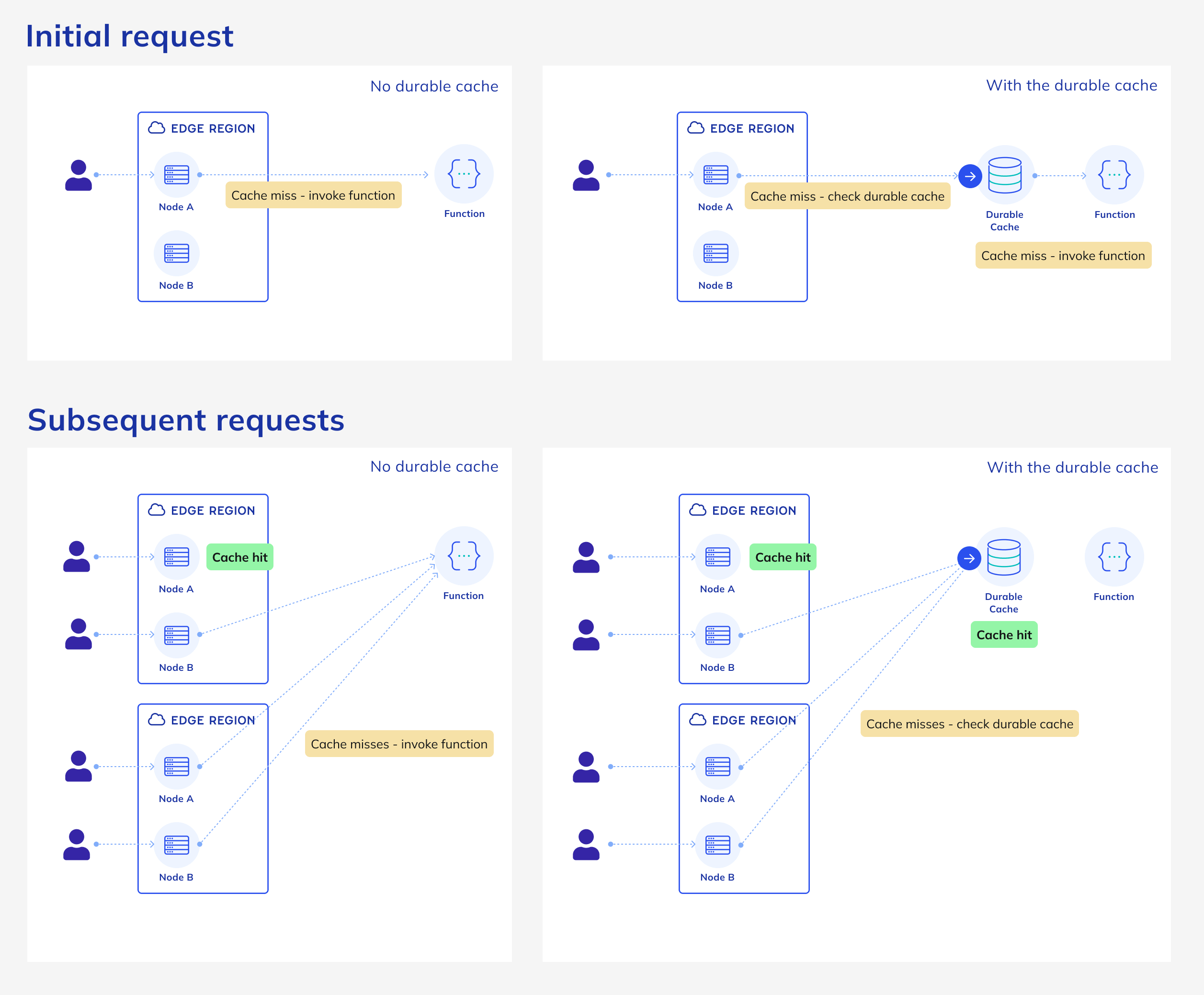

If each edge node invoked serverless functions for any dynamic content it doesn’t have cached locally, that could mean a lot of function calls, each with its own latency and each potentially slowing down other concurrent requests. Most of these calls would be redundant, and could be optimized away by an intermediate caching layer.

Durable Cache is exactly that mechanism.

It holds cacheable responses from serverless functions at an intermediate layer, so that all edge nodes can share the same cached content.
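A simplified model of that layering, with hypothetical `EdgeNode` and `SharedLayer` classes standing in for the real, distributed components: each edge node checks its own cache first, then the shared layer, and only a miss in both invokes the function. With three edge nodes requesting the same page, the function runs once instead of three times.

```typescript
// Hypothetical model: per-node edge caches in front of one shared (durable) layer.
type Fn = (url: string) => string;

class SharedLayer {
  private store = new Map<string, string>();
  private fn: Fn;
  public functionCalls = 0;

  constructor(fn: Fn) {
    this.fn = fn;
  }

  get(url: string): string {
    let body = this.store.get(url);
    if (body === undefined) {
      this.functionCalls++; // only a miss here reaches the serverless function
      body = this.fn(url);
      this.store.set(url, body);
    }
    return body;
  }
}

class EdgeNode {
  private local = new Map<string, string>();
  private shared: SharedLayer;

  constructor(shared: SharedLayer) {
    this.shared = shared;
  }

  get(url: string): string {
    let body = this.local.get(url);
    if (body === undefined) {
      body = this.shared.get(url); // edge miss: ask the shared layer, not the function
      this.local.set(url, body);
    }
    return body;
  }
}

const durable = new SharedLayer((url) => `<html>${url}</html>`);
const edges = ["fra", "iad", "syd"].map(() => new EdgeNode(durable));

for (const edge of edges) edge.get("/pricing");
console.log(durable.functionCalls); // 1 (without the shared layer it would be 3)
```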

Additionally, if you revalidate a high-traffic page that was marked stale-while-revalidate, the mechanism de-duplicates the burst of concurrent requests that immediately follows, and generates the fresh page only once.

Storing a given function response in the Durable Cache is opt-in, via a new directive in the Cache-Control response header, aptly named durable (see docs). The feature is available now, on all plans, at no extra cost.
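As a sketch of the opt-in, assuming the durable directive is combined with standard caching directives in the same header value: the directive name comes from this post, while the helper name, the `s-maxage` and `stale-while-revalidate` values, and the use of the plain Cache-Control header are illustrative assumptions. Check the docs for the exact header and syntax Netlify expects.

```typescript
// Hypothetical helper: headers for a function response that should be stored
// in the Durable Cache, served from cache for a while, then revalidated with SWR.
function durableCacheHeaders(sMaxAge: number, staleWhileRevalidate: number): Record<string, string> {
  return {
    "Content-Type": "text/html; charset=utf-8",
    "Cache-Control": [
      "public",
      "durable", // opt this response into the shared Durable Cache layer
      `s-maxage=${sMaxAge}`,
      `stale-while-revalidate=${staleWhileRevalidate}`,
    ].join(", "),
  };
}

console.log(durableCacheHeaders(60, 120)["Cache-Control"]);
// public, durable, s-maxage=60, stale-while-revalidate=120
```

In a function handler, these headers would be passed to the standard Fetch API `Response` you return, e.g. `new Response(html, { headers: durableCacheHeaders(60, 120) })`.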

You can use this new directive today with frameworks such as Astro and Remix, and we are gradually rolling out built-in support for it in our Next.js Runtime v5.

The next frontier

Building fast, on-demand personalized content isn’t solved just by platform-level caching improvements, either.

For the easiest mix of static and non-static content, the framework should step in and provide an abstraction that lets developers include both types in the same page with minimal boilerplate, code duplication, fetch-request management, etc.

This is exactly the point of the new experimental feature in Astro: Server Islands.

True to Astro’s usual approach, it is straightforward and cache-friendly. You can combine it with all of Netlify’s platform features for a fast solution that works just the way you need it. You can try it on Netlify today.